The previous blog in this series discussed how Britive’s access broker helps secure user and service access to any Kubernetes cluster. In this blog, we will look at a practical example: securing a GCP Anthos cluster that runs on an Amazon EC2 instance behind a virtual private cloud (VPC).

We will use Google’s fleet management functionality to set up the cluster and configure the identity provider. Google provides two ways to authenticate to and access Anthos clusters. The first is to use a GCP identity that has the right roles and permissions on the cluster. The second is to connect directly to the cluster, authenticating through a third-party identity provider configured on it.

Britive supports policy-based just-in-time access for both options. This blog explores the latter, which lets us keep uniform access patterns and manage access efficiently across all managed clusters in an environment.

Setup

Here are the high-level steps we will go through:

- As an admin, establish a federation trust relationship between Britive and the cluster. As part of this configuration, we will identify the scopes (attributes) the cluster must use to determine the user's identity and group membership (access).

- Set up Britive profiles to grant policy-based access to users.

- As an end user, use kubectl to access the cluster, with Britive providing short-lived tokens that carry the user's identity and group memberships.

Federation

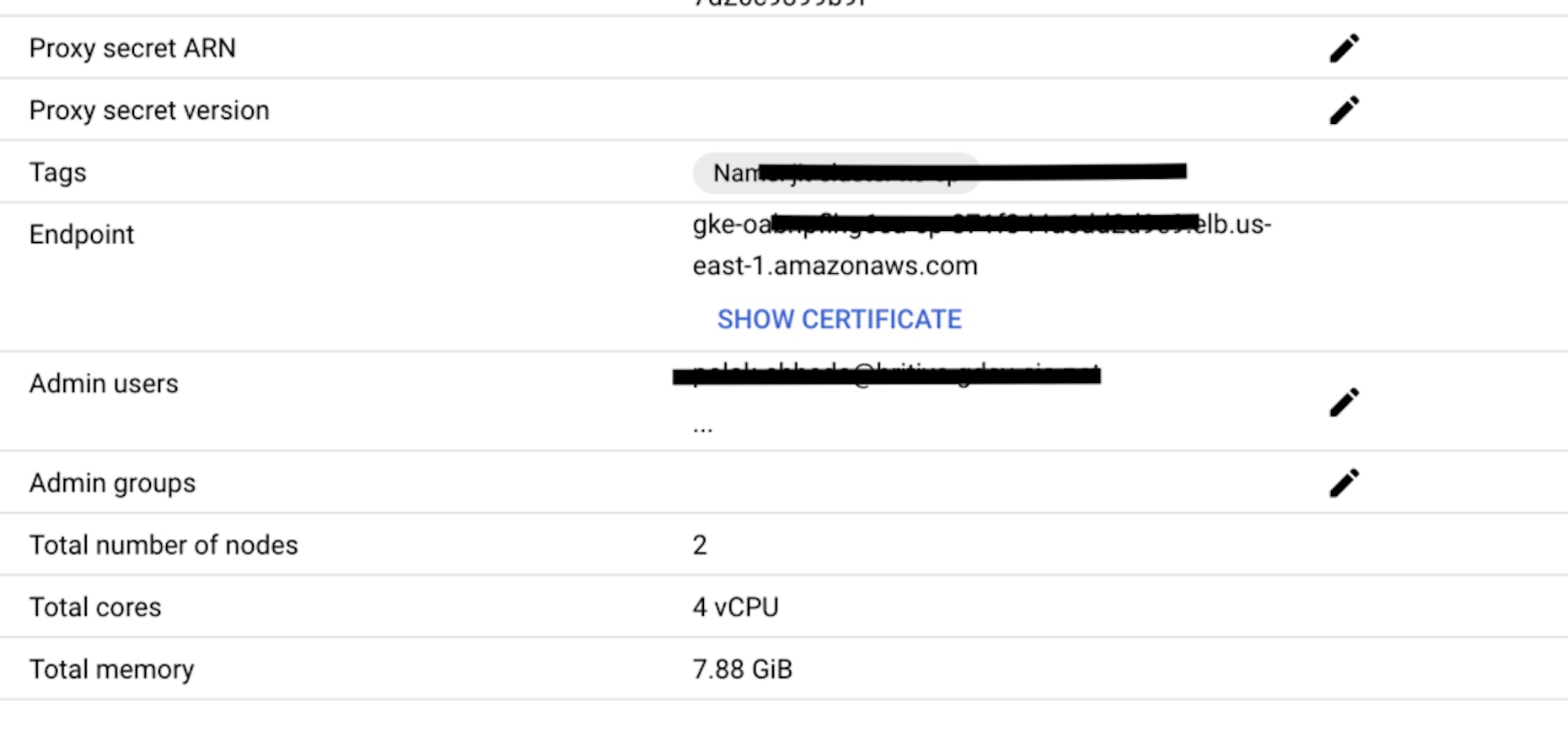

- From the cluster details page fetch the Endpoint URL and the certificate.

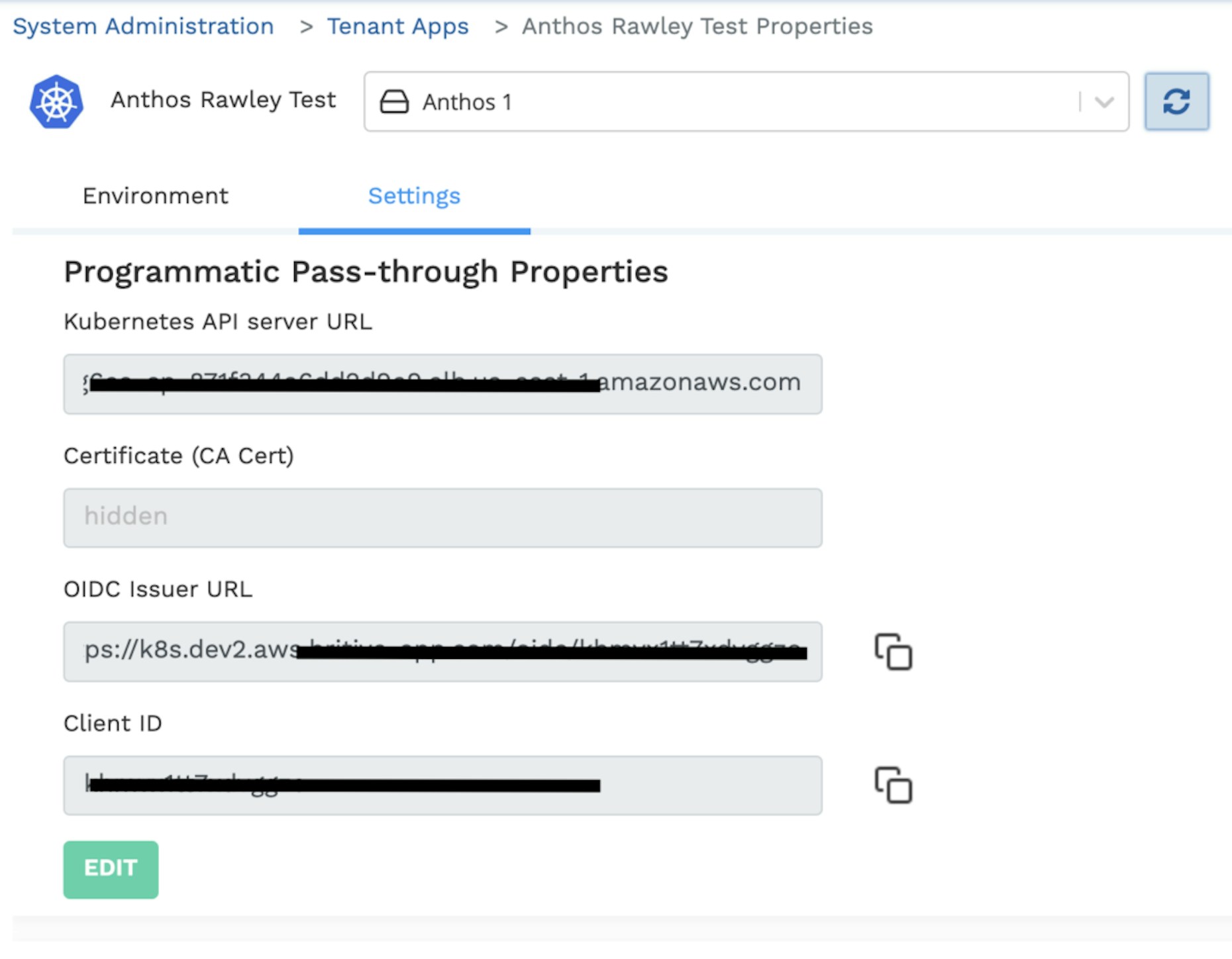

- Provide the cluster API Server endpoint and the certificate as shown below. The Endpoint from the previous step will serve as the Kubernetes API server URL and the certificate must be encoded to BASE64 before entering it into the Britive application configuration.

- Britive will generate the unique OIDC Issuer UR and Client ID for the cluster and make it available on this page.
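Because the certificate has to be Base64-encoded before it goes into the Britive form, a small helper avoids stray line breaks from command-line encoders. This is a minimal sketch that assumes Britive expects the Base64 of the full PEM text (BEGIN/END lines included), the same convention kubeconfig uses for certificate-authority-data; the function name is our own, not part of any Britive tool.

```python
import base64

PEM_HEADER = "-----BEGIN CERTIFICATE-----"


def encode_certificate(pem_text: str) -> str:
    """Return a PEM certificate as one line of standard Base64.

    Assumption: the full PEM text (header and footer lines included) is
    what gets encoded, as with kubeconfig's certificate-authority-data.
    """
    # Normalize surrounding whitespace picked up when copying from the console.
    pem_text = pem_text.strip() + "\n"
    if not pem_text.startswith(PEM_HEADER):
        raise ValueError("input does not look like a PEM certificate")
    # b64encode emits no line breaks, so the result pastes as a single field.
    return base64.b64encode(pem_text.encode("ascii")).decode("ascii")
```

For example, `encode_certificate(open("cluster-ca.pem").read())`, where `cluster-ca.pem` is a hypothetical file holding the certificate copied from the cluster details page.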

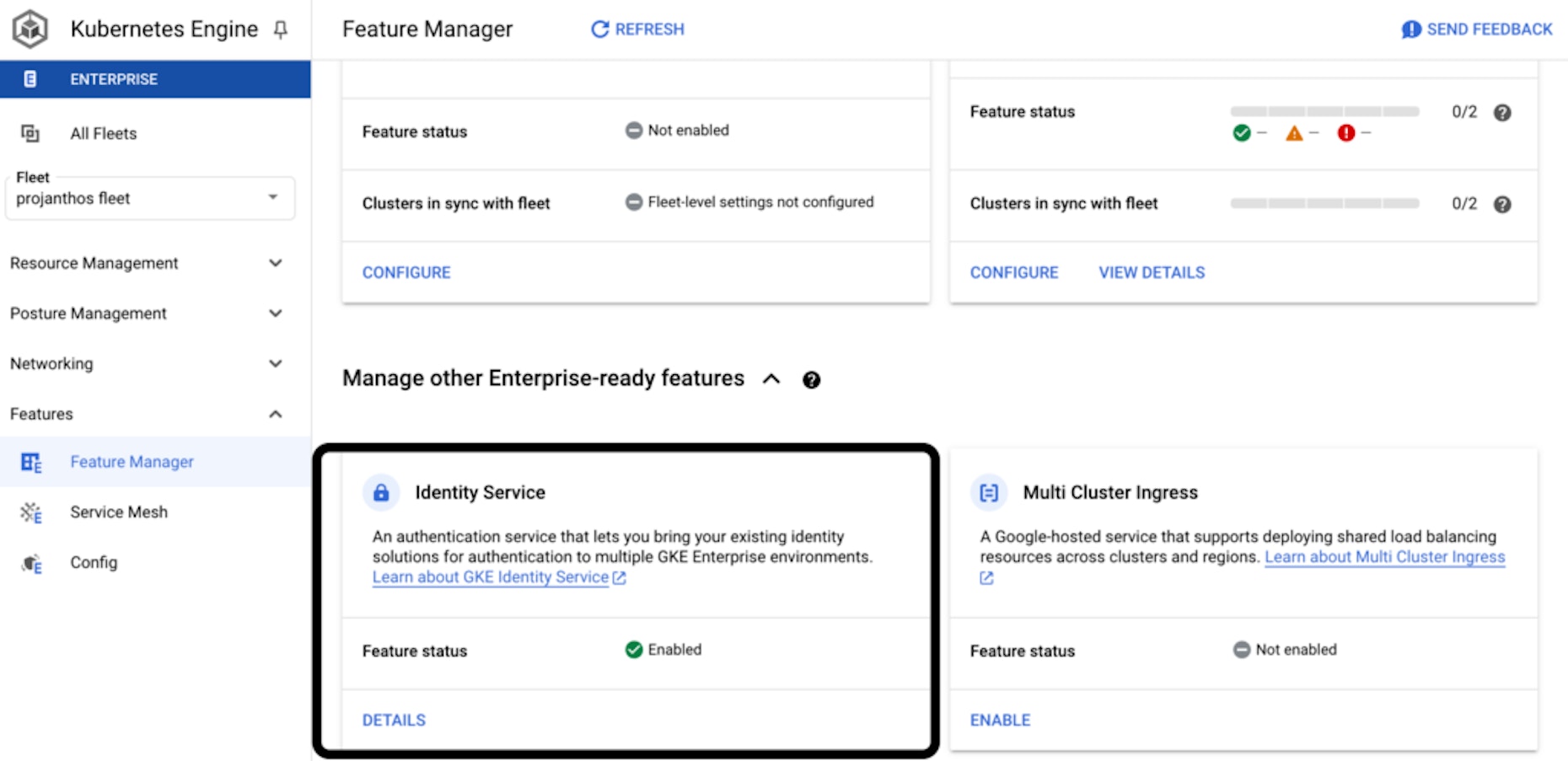

Next, navigate to the Feature Manager for the Anthos fleet and add a new identity service called Britive.

- When configuring the identity provider, make sure the following attributes and mappings are set:
  - Group Claim: groups
  - Scopes: openid,profile,offline_access
  - User Claim: sub
  - Extra Params: prompt=consent

- Next, as a GCP admin on the project, run the following commands in a shell (bash or Cloud Shell) to download the relevant configuration as a YAML file and apply it to the cluster.

This completes the federation connection.
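With the claim mapping above, the cluster takes the username from the token's sub claim and the group memberships from its groups claim. When troubleshooting, it can help to look inside a token and confirm those claims are present. The sketch below decodes a JWT payload without verifying the signature (a debugging aid only; the cluster's identity service does the real validation), and it assumes no user or group prefixes are configured. The function names are hypothetical.

```python
import base64
import json


def decode_jwt_claims(token: str) -> dict:
    """Decode the claims segment of a JWT WITHOUT verifying its signature."""
    try:
        _header, payload, _signature = token.split(".")
    except ValueError:
        raise ValueError("expected a JWT with three dot-separated segments") from None
    # JWT segments are base64url-encoded with the '=' padding stripped; restore it.
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def kubernetes_identity(claims: dict, user_claim: str = "sub", groups_claim: str = "groups"):
    """Return (username, groups) following the User Claim / Group Claim settings."""
    return claims[user_claim], list(claims.get(groups_claim, []))
```

If the groups claim is missing or empty, no RoleBinding that targets a Group subject will match, and the user will be denied.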

Cluster Configuration

Now, let's create a few test components in the cluster: a namespace, plus roles and role bindings scoped to that namespace. You can skip this step if you already have a namespace and role bindings that you want to grant access to through Britive.

Create the namespace, then create the roles and role bindings from the YAML files shown below.

```bash
# Create the namespace, then apply both manifests (one -f flag per file)
kubectl create namespace jit
kubectl apply -f roles.yaml -f rolebindings.yaml
```

```yaml
# roles.yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: jit
  name: jit-admin
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: jit
  name: pod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: jit
  name: ns-manager
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["*"]
  verbs: ["*"]
```

```yaml
# rolebindings.yaml
# Each binding grants a Role in the jit namespace to a group from the token's groups claim.
# The referenced Role must already exist in that namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jit-admin-binding
  namespace: jit
subjects: # you can specify more than one subject
- kind: Group
  name: jit-admins # "name" is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef: # must be Role or ClusterRole; name must match the Role to bind
  kind: Role
  name: jit-admin
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: nsmanager-binding
  namespace: jit
subjects:
- kind: Group
  name: ns-managers # "name" is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: ns-manager
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader-binding
  namespace: jit
subjects:
- kind: Group
  name: developers # "name" is case sensitive
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Britive Configuration

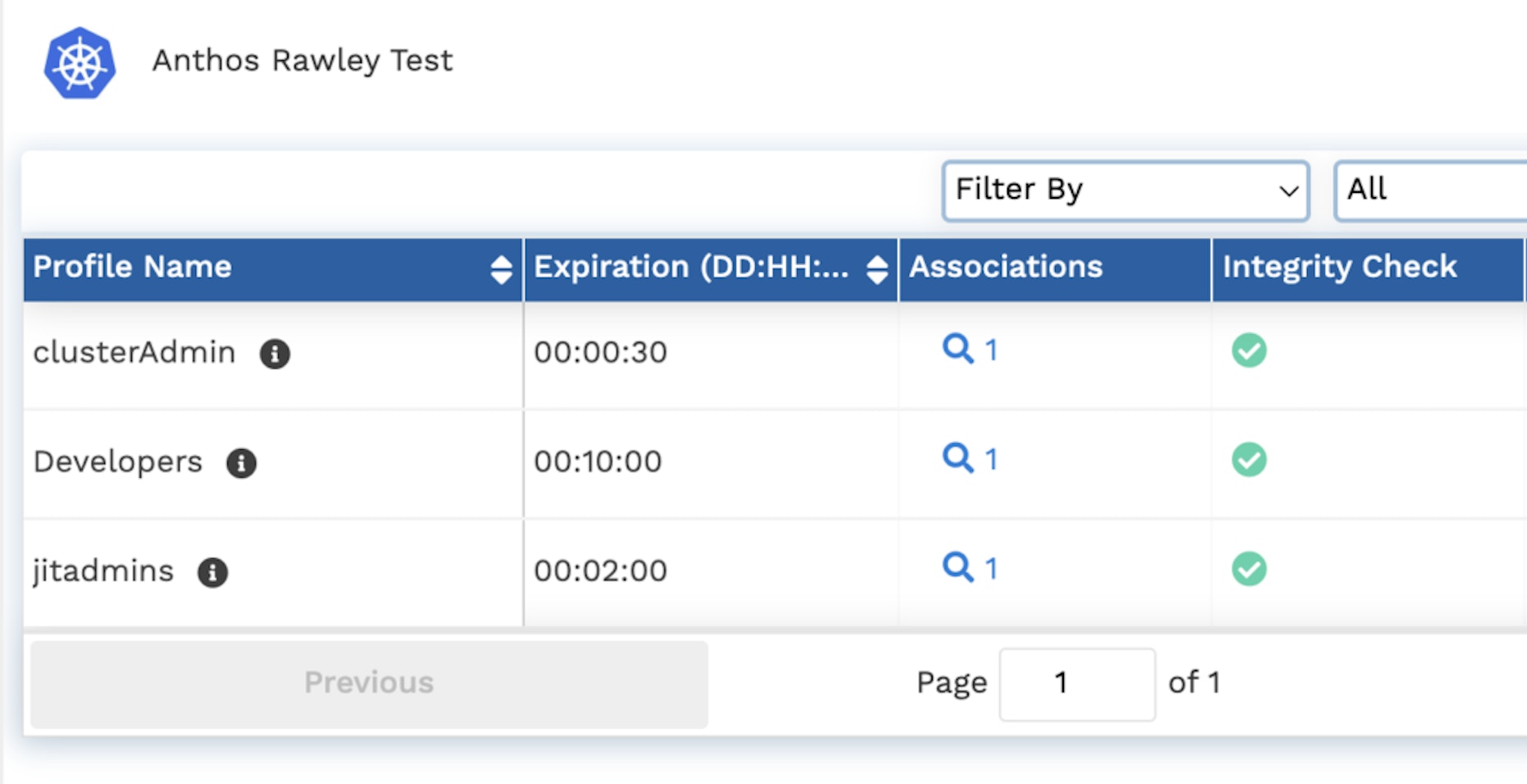

We will create a matching Britive profile for each group referenced in the role bindings (jit-admins, ns-managers, and developers). The configured profiles look something like the following. Each profile has its own expiration time and access policy. Learn more about Britive profiles and how to create them.
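Since each profile corresponds to one group, it helps to know what a user holding each profile can actually do. The sketch below models the three roles and role bindings from the YAML files in a simplified RBAC check. It is an illustration, not the Kubernetes authorizer: it ignores ClusterRoles, subresources, resourceNames, and the default permissions every authenticated user receives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    api_groups: tuple
    resources: tuple
    verbs: tuple

    def allows(self, api_group: str, resource: str, verb: str) -> bool:
        # "*" is a wildcard; "" in api_groups means the core API group.
        return (
            ("*" in self.api_groups or api_group in self.api_groups)
            and ("*" in self.resources or resource in self.resources)
            and ("*" in self.verbs or verb in self.verbs)
        )


# Roles from roles.yaml, all defined in the "jit" namespace.
ROLES = {
    "jit-admin": [Rule(("*",), ("*",), ("*",))],
    "pod-reader": [Rule(("",), ("pods",), ("get", "watch", "list"))],
    "ns-manager": [Rule(("",), ("*",), ("*",))],
}
# Group -> Role, from rolebindings.yaml.
BINDINGS = {"jit-admins": "jit-admin", "ns-managers": "ns-manager", "developers": "pod-reader"}
BINDING_NAMESPACE = "jit"


def is_allowed(groups, namespace, api_group, resource, verb) -> bool:
    """RoleBindings only grant access inside their own namespace."""
    if namespace != BINDING_NAMESPACE:
        return False
    return any(
        rule.allows(api_group, resource, verb)
        for group in groups
        if group in BINDINGS
        for rule in ROLES[BINDINGS[group]]
    )
```

One consequence worth noticing: ns-manager only covers the core API group, so a member of ns-managers can delete ConfigMaps in jit but cannot create Deployments (apps group). On a live cluster, an admin with impersonation rights can confirm the same answers with `kubectl auth can-i list pods --namespace jit --as=alice --as-group=developers`, where alice is a placeholder username.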

User Experience

Since the cluster sits inside a VPC, we connect to a bastion host and log in to Britive with the PyBritive CLI. Once the user is logged in, PyBritive creates the necessary kubeconfig contexts (entries) for that user. In the user session depicted below, the user accesses the cluster through the developers role binding in the jit namespace and cannot access resources outside that namespace.
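kubectl can fetch short-lived tokens on demand through the client-go credential plugin (exec) mechanism: a kubeconfig user entry names a command, kubectl runs it, and reads an ExecCredential object from its standard output. We have not confirmed that PyBritive's generated contexts use exactly this mechanism; the sketch below only illustrates the protocol, and fetch_token is a hypothetical placeholder for checking out a profile.

```python
import json
from datetime import datetime, timedelta, timezone


def build_exec_credential(token: str, expires_at: datetime) -> str:
    """Serialize an ExecCredential as defined by the client-go credential plugin protocol."""
    return json.dumps({
        "apiVersion": "client.authentication.k8s.io/v1",
        "kind": "ExecCredential",
        "status": {
            "token": token,
            # RFC 3339 UTC time; client-go caches the token until then, then reruns the plugin.
            "expirationTimestamp": expires_at.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        },
    })


def fetch_token():
    """HYPOTHETICAL placeholder: return a short-lived token and its expiry."""
    return "example-token", datetime.now(timezone.utc) + timedelta(minutes=15)


if __name__ == "__main__":
    token, expires_at = fetch_token()
    print(build_exec_credential(token, expires_at))
```

A kubeconfig user entry would point its exec.command at such a script and set exec.apiVersion to client.authentication.k8s.io/v1 so kubectl parses the output correctly.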

Conclusion

The Britive platform, together with the PyBritive CLI, provides dynamic, just-in-time privileges for any kind of Kubernetes cluster. The token issued for each profile grants just enough access, through its group membership, to carry out operations within the matching context. PyBritive fetches these tokens transparently while the user works with the cluster through kubectl, k9s, or any similar tool.

Ready to see how Britive can help you secure your access to Anthos enterprise clusters? Learn more about Britive’s Kubernetes integration, or reach out to a member of our team to schedule time for a demo.